Exercise 11.1.2.

Statistics for Ecologists (Edition 2) Exercise 11.1.2

These notes supplement Chapter 11 and explore the use of beta coefficients, which can be a useful addition to a regression analysis.

Beta coefficients from linear models

Introduction

In linear regression your aim is to describe the data in terms of a (relatively) simple equation. The simplest form of regression is between two variables:

y = mx + c

In the equation y represents the response variable and x is a single predictor variable. The slope, m, and the intercept, c, are known as coefficients. If you know the values of these coefficients then you can plug them into the formula for values of x, the predictor, and produce a value for the response.

In multiple regression you “extend” the formula to obtain coefficients for each of the predictors.

y = m1.x1 + m2.x2 + m3.x3 + ... + c

If you standardize the coefficients (using standard deviation of response and predictor) you can compare coefficients against one another, as they effectively assume the same units/scale.

The functions for computing beta coefficients are not built-in to R. In these notes you’ll see some custom R commands that allow you to get the beta coefficients easily. You can download the Beta coeff calc.R file and use it how you like.

What are beta coefficients?

Beta coefficients are regression coefficients (analogous to the slope in a simple regression/correlation) that are standardized against one another. This standardization means that they are “on the same scale”, or have the same units, which allows you to compare the magnitude of their effects directly.

Beta coefficients from correlation

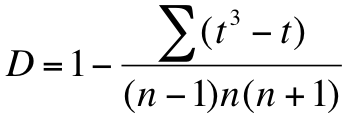

It is possible to calculate beta coefficients more or less directly, if you have the correlation coefficient, r, between the various components:

Calculating beta coefficients from correlation coefficients.

The subscripts can be confusing but essentially you can use a similar formula for the different combinations of variables.

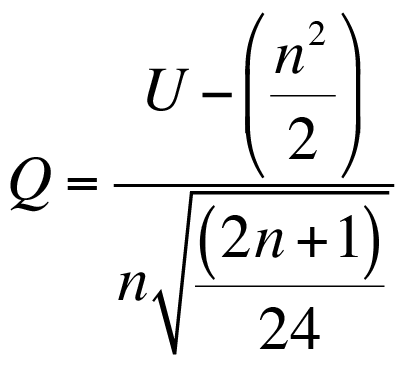

Beta coefficients from regression coefficients

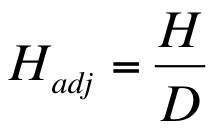

In most cases you’ll have calculated the regression coefficients (slope, intercept) and you can use these, along with standard deviation, to calculate the beta coefficients.

Calculating a beta coefficient from a regression coefficient and standard deviation.

This formula is a lot easier to understand: b’ is the beta coefficient, b is the standard regression coefficient. The x and y refer to the predictor and response variables. You therefore take the standard deviation of the predictor variable, divide by the standard deviation of the response and multiply by the regression coefficient for the predictor under consideration.

There are no built-in functions that will calculate the beta coefficients for you, so I wrote one myself. The command(s) are easy to run/use and I’ve annotated the script/code fairly heavily so it should be useful/helpful for you to see what’s happening.

The beta.coef() command

I wrote the beta.coef() command to calculate beta coefficients from lm() result objects. There are also print and summary functions that help view the results. Here’s a brief overview:

beta.coef(model, digits = 7) |

|

print.beta.coef(object, digits = 7) |

|

summary.beta.coef(object, digits = 7) |

|

| model | A regression model, the result from lm(). |

| object | The result of a beta.coef() command. |

| digits = 7 | The number of digits to display, defaults to 7. |

The beta.coef() command produces a result with a custom class beta.coef. The print() and summary() commands will use this class to display the coefficients or produce a more comprehensive summary (compares the regular regression coefficients and the beta).

Using beta.coef()

You will need the result of a linear regression, usually this will be one with the class “lm”.

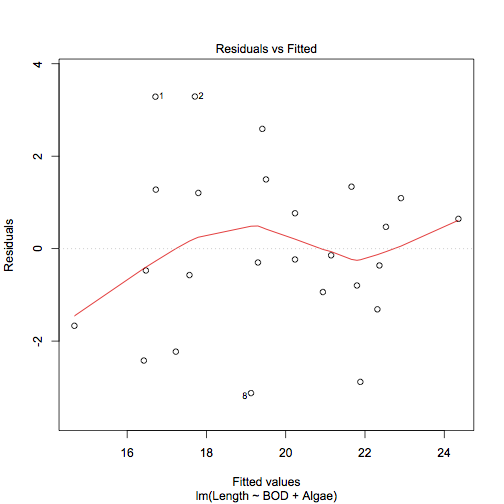

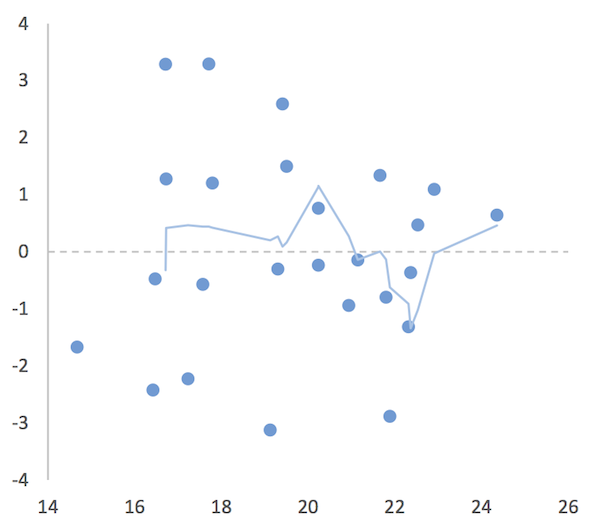

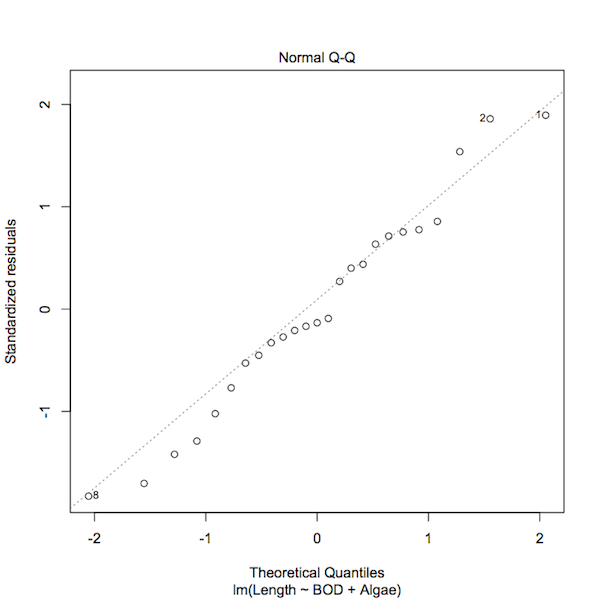

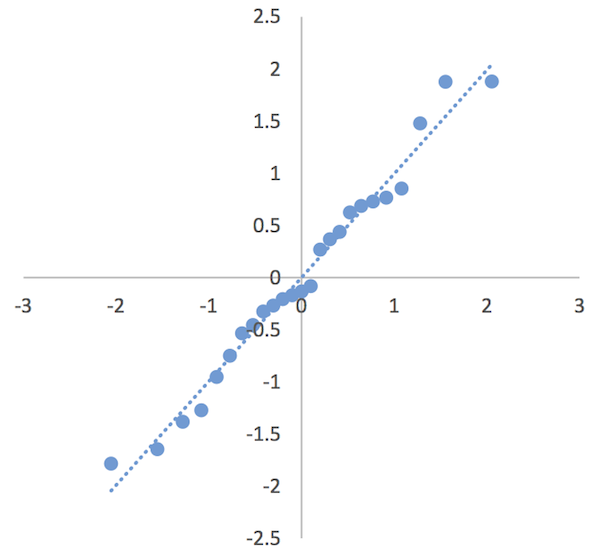

mf.lm <- lm(Length ~ BOD + Algae, data = mf)

summary(mf.lm)

Call:

lm(formula = Length ~ BOD + Algae, data = mf)

Residuals:

Min 1Q Median 3Q Max

-3.1246 -0.9384 -0.2342 1.2049 3.2908

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 22.34681 4.09862 5.452 1.78e-05 ***

BOD -0.03779 0.01517 -2.492 0.0207 *

Algae 0.04809 0.03504 1.373 0.1837

Signif. codes: 0 ‘’ 0.001 ‘’ 0.01 ‘’ 0.05 ‘.’ 0.1 ‘ ’ 1 Residual standard error: 1.83 on 22 degrees of freedom Multiple R-squared: 0.6766, Adjusted R-squared: 0.6472 F-statistic: 23.01 on 2 and 22 DF, p-value: 4.046e-06

Once you have the result you can use the beta.coef() command to compute the beta coefficients:

mf.bc <- beta.coef(mf.lm)

Beta Coefficients for: mf.lm

BOD Algae

Beta.Coef -0.5514277 0.3037675

Note that the result is shown even though the result was assigned to a named object.

Print beta.coef results

You can use the print method to display the coefficients, setting the number of digits to display:

print(mf.bc, digits = 4)

Beta coefficients:

BOD Algae

Beta.Coef -0.5514 0.3038

The command takes the $beta.coef component from the beta.coef() result object.

Summary beta.coef results

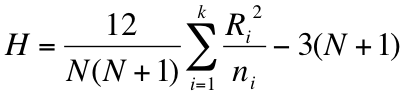

The summary method produces a result that compares the regular regression coefficients and the standardized ones (the beta coefficients). You can set the number of digits to display using the digits parameter:

summary(mf.bc, digits = 5)

Beta coefficients and lm() model summary.

Model call:

lm(formula = Length ~ BOD + Algae, data = mf)

(Intercept) BOD Algae

Coef 22.347 -0.037788 0.048094

Beta.Coef NA -0.551428 0.303768

The command returns the model call as a reminder of the model.

Add the commands to your version of R

To access the commands, you could copy/paste the code from Beta coeff calc.R. Alternatively, you can download the file and use:

source("Beta coeff calc.R")

As long as the file is in the working directory. Alternatively, on Windows or Mac use:

source(file.choose())

The file.choose() part opens a browser-like window, allowing you to select the file. Once loaded the new commands will be visible if you type ls().

The lm.beta package

After I had written the code to calculate the beta coefficients I discovered the lm.beta package on the CRAN repository.

Install the package:

install.packages("lm.beta")

The package includes the command lm.beta() which calculates beta coefficients. The command differs from my code in that it adds the standardized coefficients (beta coefficients) to the regression model. The package commands also allow computation of beta coefficients for interaction terms.

Use the command:

help(lm.beta)

to get more complete documentation once you have the package installed and running. You can also view the code directly (there is no annotation).

lm.beta print.lm.beta summary.lm.beta

The lm.beta() command produces a result with two classes, the original “lm” and “lm.beta”.